Learning a little bit about Mistral’s text generation capability using their Python API here. Nothing fancy. Here’s the original article on Mistral’s text generation.

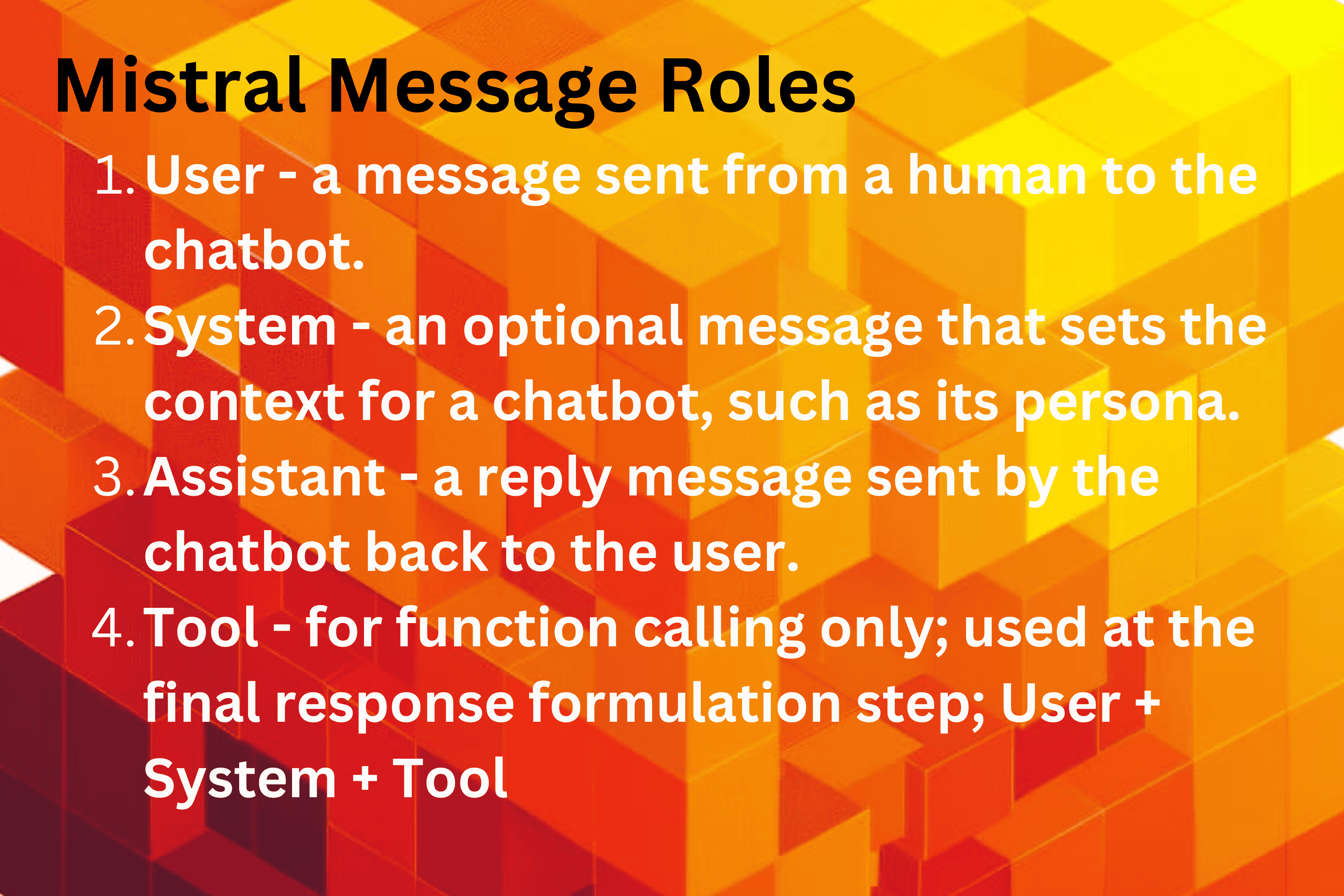

A conversation with Mistral can include messages with different roles:

- A user message is a message sent from a human to the chatbot. This is typically how we first interact with any chatbot.

- A system message is an optional message that sets the context for a chatbot, such as modifying its personality or providing specific instructions. For example, we often ask the chatbot to act in a specific role, such as “You are an expert in marketing and sales from a Fortune 500 company”.

- An assistant message is a message sent by the chatbot back to the user. It is meant to reply to a previous user message by following its instructions.

- A tool message only appears in the context of function calling. It is used at the final response formulation step, when the model has to format the tool call’s output for the user. Learn more about Mistral’s function calling.
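To make the four roles concrete, here is a minimal sketch of one conversation history written as plain Python dictionaries with `role` and `content` keys. It assumes these dicts mirror the shape of the messages the chat API accepts; the examples below use the client's `ChatMessage` objects instead. The tool-call fields are simplified, and the ID `call_001` is made up for illustration.

```python
# A hypothetical conversation that uses every message role.
# Plain dicts stand in for ChatMessage objects; the tool-call fields are
# simplified and the ID "call_001" is invented for illustration.
conversation = [
    {"role": "system", "content": "You are a concise weather assistant."},
    {"role": "user", "content": "What's the weather like in New York?"},
    # The model replies with a request to call a tool instead of plain text.
    {
        "role": "assistant",
        "content": "",
        "tool_calls": [
            {
                "id": "call_001",
                "function": {"name": "get_weather", "arguments": '{"city": "New York"}'},
            }
        ],
    },
    # Our code runs the tool and reports the result back with role "tool".
    {"role": "tool", "name": "get_weather", "content": "Sunny, 25°C", "tool_call_id": "call_001"},
    # The model turns the tool output into a reply for the user.
    {"role": "assistant", "content": "It's sunny and 25°C in New York."},
]

for message in conversation:
    # Empty content marks the assistant turn that only requested a tool call.
    print(f"{message['role']:>9}: {message['content'] or '(tool call)'}")
```

The `tool_call_id` on the tool message matches the `id` of the call the assistant requested. This link is how a tool result is tied back to the request that produced it.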

Setup

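Let’s start with the basics. Assuming you have Python installed and have created a project directory with a corresponding virtual environment, install the `mistralai` library:

```shell
pip install "mistralai<1.0"
```

The examples below use the pre-1.0 `MistralClient` interface of the `mistralai` package. Version 1.0 of the library replaced it with a new `Mistral` client, so the version pin keeps this code working.

Start a Python file or notebook and connect to Mistral through its Python API. Feel free to change the model version: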

```python
import os

from mistralai.client import MistralClient
from mistralai.models.chat_completion import ChatMessage

api_key = os.environ["MISTRAL_API_KEY"]
model = "mistral-large-latest"

client = MistralClient(api_key=api_key)
```

Role: User

Send a message with role “user” to the Mistral chatbot:

```python
messages = [
    ChatMessage(role="user", content="What is the best French cheese?")
]

chat_response = client.chat(
    model=model,
    messages=messages,
)

print(chat_response.choices[0].message.content)
```

Response:

France is known for its wide variety of delicious cheeses, and the "best" one can depend on personal preference. However, some of the most popular and highly regarded French cheeses include:

1. Brie de Meaux: Often referred to as the "king of cheeses," this soft cheese is known for its creamy texture and mild, slightly sweet flavor.

2. Camembert: Similar to Brie, Camembert is a soft cheese with a bloomy rind. It has a rich, buttery flavor and a smooth, runny texture when ripe.

3. Roquefort: This is a type of blue cheese made from sheep's milk. It has a strong, tangy flavor and a crumbly texture.

4. Comté: A hard cheese made from unpasteurized cow's milk, Comté has a complex flavor that is nutty, sweet, and slightly fruity.

5. Reblochon: This soft cheese has a unique history and a distinct flavor. It's creamy, slightly nutty, and has a subtle fruity taste.
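For intuition, `client.chat` is a convenience wrapper around an HTTP request to Mistral's chat completions endpoint. The sketch below uses only the standard library to build an equivalent request without sending it. The endpoint URL, the bearer-token header, and the `build_chat_request` helper name are assumptions for illustration, so check them against Mistral's API reference before relying on them.

```python
import json
import urllib.request

# Assumed REST endpoint for chat completions (verify against Mistral's API reference).
API_URL = "https://api.mistral.ai/v1/chat/completions"


def build_chat_request(api_key, model, messages):
    """Build, but do not send, a chat completion request (hypothetical helper)."""
    payload = {"model": model, "messages": messages}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    api_key="YOUR_API_KEY",  # placeholder, not a real key
    model="mistral-large-latest",
    messages=[{"role": "user", "content": "What is the best French cheese?"}],
)
print(request.get_method(), request.full_url)
print(json.loads(request.data))

# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(request) as resp:
#     body = json.load(resp)
#     print(body["choices"][0]["message"]["content"])
```

The Python client saves you from writing this boilerplate. Seeing the raw payload makes it clear that a "conversation" is simply the list of messages you send with each request.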

Role: System

Let’s create a function to handle the conversation interface:

```python
def chat_with_mistral(messages):
    # Send the full list of ChatMessage objects and return only the reply text
    response = client.chat(
        model=model,
        messages=messages
    )
    return response.choices[0].message.content
```

Define a list of messages that starts with a system prompt. In this case, we give the chatbot a role:

```python
# Define your messages
messages = [
    ChatMessage(role="system", content="You are a helpful assistant that translates English to Italian."),
    ChatMessage(role="user", content="Translate the following sentence from English to Italian: I love programming.")
]
```

Send the messages to the chatbot:

```python
# First turn
assistant_response = chat_with_mistral(messages)
print(assistant_response)
```

Response:

Sure, I'd be happy to help with that! The English sentence "I love programming" translates to "Amo la programmazione" in Italian.
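The system message is just the first entry in the list, so it is easy to wrap in a small helper. This is a minimal sketch: `with_system_prompt` is a hypothetical name, and it uses plain dictionaries rather than `ChatMessage` so it runs without the client installed.

```python
def with_system_prompt(system_prompt, messages):
    """Return a new message list with exactly one system message at the front.

    Any existing system messages are dropped so the new prompt takes over;
    the input list is not modified.
    """
    rest = [m for m in messages if m["role"] != "system"]
    return [{"role": "system", "content": system_prompt}] + rest


history = [
    {"role": "system", "content": "You are a pirate."},
    {"role": "user", "content": "Translate 'I love programming' to Italian."},
]
messages = with_system_prompt(
    "You are a helpful assistant that translates English to Italian.", history
)
for m in messages:
    print(m["role"], "->", m["content"])
```

This sketch replaces any earlier system message rather than stacking several. That keeps the model's instructions in one place; it is a design choice, not an API requirement.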

Role: Assistant

Here’s a simple example of the “assistant” role in a conversation: send a message, get the response, then send a second message. The response in the middle is added to the conversation with role “assistant”, so the model can see its own earlier reply. Take a look:

```python
# 1st user message
conversation = [
    ChatMessage(role="user", content="Hello, can you help me translate some English phrases to Italian?")
]

# chatbot's reply
assistant_response = chat_with_mistral(conversation)

# add chatbot's reply to the conversation and label it "assistant"
conversation.append(ChatMessage(role="assistant", content=assistant_response))

# add 2nd user message
conversation.append(ChatMessage(role="user", content="Great! Please translate 'I love programming' to Italian."))

# send everything through the chatbot
assistant_response = chat_with_mistral(conversation)
print(assistant_response)
```

Response:

Sure! The phrase "I love programming" can be translated to Italian as "Amo la programmazione". Here's the breakdown:

* "Amo" means "I love"

* "la" is the definite article for feminine singular nouns, and in this case, it's used because "programmazione" is a feminine singular noun

* "programmazione" means "programming"

So, the literal translation of "Amo la programmazione" is "I love the programming", but in this context, it can be translated as "I love programming".

You can continue by adding the reply and a new message to the conversation.
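One way to package that pattern is a small helper that records both sides of each exchange. In this sketch, `add_turn` is a hypothetical name and `reply_fn` stands in for `chat_with_mistral`. A fake reply function keeps the example offline, and plain dicts are assumed in place of `ChatMessage` objects.

```python
def add_turn(conversation, user_text, reply_fn):
    """Append a user message, get a reply, and append it as an assistant message."""
    conversation.append({"role": "user", "content": user_text})
    reply = reply_fn(conversation)  # the model only sees what is in this list
    conversation.append({"role": "assistant", "content": reply})
    return reply


def fake_reply(conversation):
    # Stand-in for a real model call: report how many messages it received.
    return f"(reply to {len(conversation)} messages)"


conversation = []
add_turn(conversation, "Hello, can you help me translate some English phrases to Italian?", fake_reply)
add_turn(conversation, "Great! Please translate 'I love programming' to Italian.", fake_reply)

for m in conversation:
    print(m["role"], "->", m["content"])
```

The growing message count in the fake replies shows that every call receives the whole history, just as the second `chat_with_mistral(conversation)` call above does.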

Role: Tool

The role “tool” is used specifically in the context of function calling. It appears at the final step, when the model has to format the tool call’s output for the user. In this flow, the conversation ends up with messages in these roles: “user”, then “assistant” (the model’s request to call a function), then “tool” (the function’s result). Let’s look at a simple weather lookup example:

```python
# Define a simple weather lookup function
def get_weather(city):
    # In a real scenario, this would call a weather API
    weather_data = {
        "New York": "Sunny, 25°C",
        "London": "Rainy, 15°C",
        "Tokyo": "Cloudy, 20°C"
    }
    return weather_data.get(city, "Weather data not available")
```

Next, define the tool specification. This JSON schema tells the model the function's name, what it does, and which arguments it takes, so the model can decide when to request a call to `get_weather`:

```python
# Define the function specifications
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city",
            "parameters": {
                "type": "object",
                "properties": {
                    "city": {
                        "type": "string",
                        "description": "The city to get weather for"
                    }
                },
                "required": ["city"]
            }
        }
    }
]
```

Start the conversation with a user message:

```python
# Start the conversation
messages = [
    ChatMessage(role="user", content="What's the weather like in New York?")
]
```

Send this message along with the tool specification to get the function call, then add the response to the conversation. Note that this response already has the role “assistant”, since it’s a reply from the chatbot to the user.

```python
# First API call to get the function call
response = client.chat(
    model=model,
    messages=messages,
    tools=tools,
    tool_choice="auto"
)

# add response; this response has the role of "assistant"
messages.append(response.choices[0].message)
```

Check that the model actually requested a function call, then execute the real `get_weather` function. Add the result to the conversation with role “tool”. Note that this is the 3rd message in the messages list, and the roles so far are “user”, “assistant”, and “tool”:

```python
import json  # needed to parse the tool-call arguments

# Check if a function was called
if response.choices[0].message.tool_calls:
    tool_call = response.choices[0].message.tool_calls[0]
    function_name = tool_call.function.name
    # The arguments arrive as a JSON string, so parse them into a dict
    function_args = json.loads(tool_call.function.arguments)

    # Execute the function
    if function_name == "get_weather":
        result = get_weather(function_args["city"])

        # Add the tool message with the result
        messages.append(ChatMessage(
            role="tool",
            name=function_name,
            content=result,
            tool_call_id=tool_call.id
        ))

        # Make another API call to get the final response
        final_response = client.chat(
            model=model,
            messages=messages
        )
        print("Final response:", final_response.choices[0].message.content)
else:
    print("No function was called:", response.choices[0].message.content)
```

Response:

Final response: The weather in New York is sunny and 25°C.
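The example above handles only the first tool call and checks the function name with an `if`. With several tools, a common pattern is a dictionary that maps function names to Python functions, so every tool call in the response gets answered. Below is a minimal offline sketch of that pattern:

- `run_tool_calls` and `TOOLS` are hypothetical names.
- `get_weather` is repeated so the block runs on its own.
- The tool calls are faked with `SimpleNamespace` objects that have the same `id`, `function.name`, and `function.arguments` attributes read above.
- Results come back as plain dicts rather than `ChatMessage` objects.

```python
import json
from types import SimpleNamespace


def get_weather(city):
    # Same stub data as the example above
    weather_data = {"New York": "Sunny, 25°C", "London": "Rainy, 15°C", "Tokyo": "Cloudy, 20°C"}
    return weather_data.get(city, "Weather data not available")


# Map each tool name the model may request to the Python function that implements it.
TOOLS = {"get_weather": get_weather}


def run_tool_calls(tool_calls):
    """Execute every requested tool call and build one "tool" message per call."""
    tool_messages = []
    for call in tool_calls:
        func = TOOLS.get(call.function.name)
        if func is None:
            result = f"Unknown tool: {call.function.name}"
        else:
            args = json.loads(call.function.arguments)  # arguments arrive as a JSON string
            result = func(**args)
        tool_messages.append({
            "role": "tool",
            "name": call.function.name,
            "content": result,
            "tool_call_id": call.id,  # ties the result back to the request
        })
    return tool_messages


# Fake tool calls shaped like the attributes read in the example above.
fake_calls = [
    SimpleNamespace(id="call_1", function=SimpleNamespace(name="get_weather", arguments='{"city": "New York"}')),
    SimpleNamespace(id="call_2", function=SimpleNamespace(name="get_weather", arguments='{"city": "Paris"}')),
]
for message in run_tool_calls(fake_calls):
    print(message)
```

With the real client, each of these results would be appended to `messages` as a `ChatMessage(role="tool", ...)` before the second `client.chat` call.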

That’s all for now!